More Metrics Insights- Really? Haven’t we had enough?

I’ve been following all the “buzz” over the past week from #SXSW and now #eMetrics regarding the development, reporting and use of “metrics” in the Social Media (SM) space. Quite an interesting dialogue, to say the least.

For some of you, particularly those who don’t live and breathe Social Media, all of this may have turned into “white noise”, as this weekend appears to have exhausted just about every angle on the subject of SM metrics that we could possibly explore. But fear not! As another week kicks into gear, a new wave of posts and blogs on the very same topic will no doubt emerge.

But I must admit that all of this “metrics talk” does strike a chord with me. After all, having spent nearly two decades helping company leaders and managers get their arms around business metrics, and the broader discipline of performance management, you would expect my ears to perk up at the word “metrics”! (I know…sad but true). And while SM is not an area I have spent an enormous amount of time studying or participating in from “the inside”, I am finding that many of the same principles I use with my “corporate clients” are very much “in play” in this new and ever-evolving market.

Stepping outside my “sandbox” …

While my life does not revolve around advances in SM, I have become what one might call a “steady user” of it. From my evening “blogging” hour to ongoing “check-ins” via Twitter, Facebook and LinkedIn, I would confess to spending at least 10-15% of my ‘awake time’ interacting with online friends and colleagues.

Of course, like many of you, I find that Social Media (which for me includes my morning time with my Pulse reader, scanning the news and blogs I monitor) has replaced the time I used to spend reading newspapers, magazines and “industry rags” (in fact, it has become a much more efficient medium, saving me lots of time and energy). And those ongoing “check-ins” that I initiate usually occur when I am either “restricted” (cabs, airports, etc.), between tasks, or otherwise indisposed (I won’t elaborate on the latter- you get the idea). But the “blogging hour”… that is something separate for me. While I do enjoy it and it helps me unwind, I also recognize it for what it is- a personal and professional investment in my own development. So yes, you could say that SM should matter to me simply because of the 15% of my day that I rely on it for.

But for me, it goes a little beyond that, especially now that the conversation has turned to metrics, and the broader issue of managing SM performance and results. Ever since I got into the Performance Management discipline years ago, I’ve been a strong believer and proponent of finding ideas and insights, wherever they occur (different companies, different industries, different geographies, etc.), and applying insights to current challenges within our own environments. Some would call this “benchmarking”. Others may call it good learning practices. For me, it’s not only common business sense, but a core set of principles that I live and manage by. And for many like me, it is the basis of any good Performance Management system.

So it’s only natural for me to observe what’s going on in this space and try to open some good “cross dialogue” on how we can lift the overall cause that I know we all are pursuing: More effective measurement, better management of performance, and stronger results.

Exploring “Best Practices” In SM Performance Measurement…

A few weeks back I published a post on what businesses outside the SM space could learn from what is happening within it. Many of you found that useful, although I must admit that it was the first time I really began experimenting with what was available out there in terms of thinking and tools. But rather than focusing on the tools, I tried to explore some of the bigger themes that were emerging in terms of practices and approaches, and attempted to determine which aspects of that SM thinking might be “import-able” by other sectors as “lessons learned”.

Today, I want to ‘turn the tables’ a bit, and talk about what other industries can teach Social Media about the art of measuring, improving, and delivering on our individual goals and aspirations.

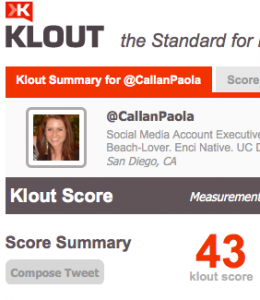

I was inspired to go in this direction by a number of posts over the weekend that appeared to delve into the same question (here’s an example regarding the measurement issues with Klout). When I read it, it sounded like good stuff, but I realized that it was really just the tip of the iceberg on a really important issue. So expanding on it seemed to be the next logical step.

So What Can SM Learn From Others?

The observations below are based merely on a snapshot of what I see taking place now, and I fully realize that this same dialogue is happening right now in certain hotel bars and restaurants. My goal is not to suggest an exhaustive list of “fix-it-nows”, but rather to open an ongoing dialogue on what we can learn and apply in our individual areas of expertise.

Is what we’re measuring today meaningful?

OK, let’s get some basics out of the way, at the risk of boring (or offending) some of the social media pundits and ‘real experts’ out there. For most users (consumers of Social Media)- the everyday users of Facebook, LinkedIn, and Twitter, for example- the answer to ‘are SM measures meaningful?’ is “probably not”. Save for ego and vanity, measures like the number of “digital friends” (Facebook friend counts and Twitter followers, for example) mean very little when it comes to managing meaningful relationships- whether maintaining existing ones or growing new ones. Meaningful relationships go way beyond these surface-level statistics.

Of course, there are individuals and businesses who do use, and rely heavily on, more in-depth statistics to track their progress. I believe at least some of them would say “yes- meaningful…but with a lot left to be desired”. The stats and measures are there. Are they meaningful and value-adding to the business? Subject to debate.

What we can be certain of is that things like follower counts, Klout scores, retweets, and click-throughs are becoming less and less valuable as measures, and that there is a deep yearning for more. Whether this takes the form of refining what’s in the algorithms and “black boxes”, or a major rethinking of the metrics themselves (which would be my vote), still appears to be a subject of great debate.

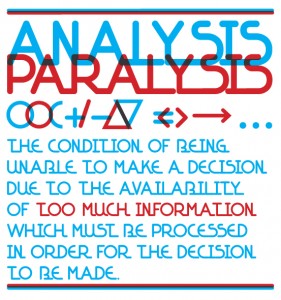

When you walk into a large company that “manages by the numbers” (and trust me, many don’t), you see that there are literally hundreds, if not thousands, of things they are tracking. Some are really meaningful, and some are as useless as an “asshole on your elbow” (I heard that one from an old (and wise) plant manager in Texas, and have been waiting months to use it- hope I didn’t offend!).

When I see that level of measurement/ quantity of metrics, a little “warning sound” goes off in my head and I start exploring the question: “For the sake of what?” are you measuring this or that? I use a variety of techniques to get them to tell me how they are going to use a certain metric (most often the question of “why?” asked repetitively works best), but often the question is rhetorical because there is no answer. I once heard someone say, “If you want to see if information is valuable, just stop sending out the report and see if anyone screams!”.

Fact is, if a measure doesn’t have a causal link to some major result area, or worse, if the person managing it cannot see that link, the metric serves no purpose other than to consume cost. Most of the tools I see in the SM space simply report stats with no obvious linkage to any real outcome. Even if something like the number of followers were important (and we all know it most often ranks pretty low), nothing in the reports shows a clear path from the stats to an outcome that matters to the user (other than loose descriptions and definitions at best).
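To make that “causal link” test a little more concrete, here is a minimal Python sketch of a line-of-sight audit: each tracked metric records the result area(s) it is believed to drive, and any metric that doesn’t connect to a result area you actually care about gets flagged. The metric names, result areas, and the `Metric` and `orphan_metrics` names are all hypothetical, made up for illustration; this is not a feature of Klout, Twitalyzer or any other tool.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    name: str
    # Result areas this metric is believed to drive; empty means no known link.
    drives: list[str] = field(default_factory=list)


def orphan_metrics(metrics: list[Metric], result_areas: set[str]) -> list[str]:
    """Return names of metrics with no link to any result area we care about."""
    return [m.name for m in metrics if not any(d in result_areas for d in m.drives)]


# Hypothetical example data, not real measurements.
results = {"client leads", "speaking invitations"}
tracked = [
    Metric("follower count"),  # tracked, but linked to nothing
    Metric("replies from target prospects", ["client leads"]),
    Metric("retweets", ["brand awareness"]),  # linked, but not to a result area in our set
    Metric("blog post shares by event organizers", ["speaking invitations"]),
]

print(orphan_metrics(tracked, results))
```

Here “follower count” and “retweets” would be flagged. In the spirit of “stop sending out the report and see if anyone screams”, those are the first candidates to question.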

Yet we all know that the tools and models for establishing those linkages exist everywhere. Just look at some of the basic tools used by stock traders. While they are far from perfect, “technicals” like Stochastics, Bollinger Bands, and simple breakout patterns have clear paths to a high-probability event or outcome, yet are available to even the most amateur investor. Even “stodgy” old utility companies can draw connections between things like permit rates, new connection activity and downstream staffing requirements. I’m not suggesting it’s easy, just that it’s important and that the tools are there to execute and simplify.

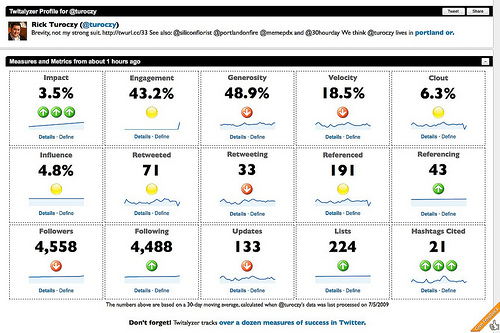

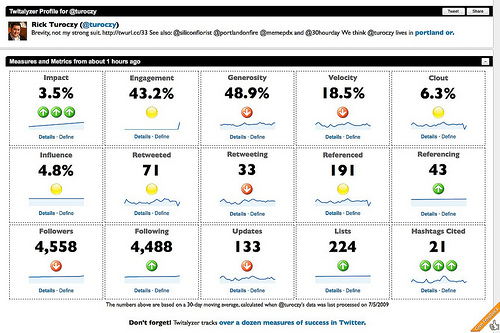

For me, this is the MOST IMPORTANT item on the list. Most of us have seen the Klout site, Twitalyzer, and the myriad of other tools out there to support the development of personal networks. These tools are extremely useful, and possess a wealth of information if you have the time and stamina to think about what it all means. I mean, come on… to have 25 metrics on one page with trends only one click away is something that a real metrics guy can only look at and say “WAY COOL”. Seriously, very cool! That’s the good news.

The bad news is that it’s the same news for everyone. But we all know the dangers of “one size fits all”. I’m not diminishing the value these tools provide. SM would be lost without them. And in their defense, certain sites like Twitalyzer and Klout have gone beyond the simple dashboards and have incorporated categories that many aspire to, and have begun to draw some connection between these aspirations and those broad categories.

But it’s just a start. (I mean, come on… are any Twitter users actually aspiring to be “social butterflies”? OK, don’t answer that, because there are probably some who do!) Perhaps a better question is whether a “social butterfly” would ever aspire to be a “thought leader”. My point is that it’s probably not a linear sequence of development, and while these categories get us one step closer to aligning measures with goals, they are still missing two things:

1) a better understanding of the goals of users (it’s probably more than 4 and fewer than 100), and

2) a guidance system that helps one use the metrics to achieve those goals.

So here’s a thought… What about a simple interface that allows you to pick a goal, and then tells you which metrics you should care about and what the target should be to accelerate within that goal class? You’d be building a model that would clearly feed on itself. I’d be surprised if the BI gurus out there don’t already have this built into their corporate BI suites and Web Analytics tools, but it would seem to me to be a great draw for the myriad of other users with goals that extend beyond butterflies and mindless follower counts.

Find out what’s important, at as customized a level as you can (and is practical), and tell us how to get there. That’s the “holy grail” in every business, and what every CEO and executive craves from their performance management process: “I’ll tell you what my strategic goal/ambition is… you tell me what the metrics AND targets are that will help me get there… and then help me track my progress!”
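Here is one way the “pick a goal, get the metrics and targets, track the gap” idea might be sketched in Python. It is a static lookup under stated assumptions: the goals, metrics and targets in the hypothetical `GOAL_CATALOG` are placeholders, not research-based benchmarks, and the self-reinforcing model described above (targets that learn from what actually works for users) is not shown.

```python
# Hypothetical goal catalog: each goal maps the metrics that matter for it to an
# illustrative target value. Goals, metrics and numbers are made up for this sketch.
GOAL_CATALOG: dict[str, dict[str, float]] = {
    "thought leader": {
        "replies per post": 5.0,
        "shares by peers per week": 10.0,
    },
    "lead generation": {
        "profile visits from target accounts per week": 20.0,
        "inbound inquiries per month": 4.0,
    },
}


def plan_for_goal(goal: str, current: dict[str, float]) -> list[tuple[str, float, float]]:
    """Return (metric, target, gap) rows for the chosen goal, largest gap first.

    A metric with no current reading is treated as 0; a negative gap means
    the target has already been exceeded.
    """
    targets = GOAL_CATALOG[goal]  # raises KeyError for an unknown goal
    rows = [(metric, target, target - current.get(metric, 0.0))
            for metric, target in targets.items()]
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Usage with hypothetical current readings for one user.
for metric, target, gap in plan_for_goal(
    "thought leader", {"replies per post": 2.0, "shares by peers per week": 9.0}
):
    print(f"{metric}: target {target}, gap {gap}")
```

With those readings it lists “replies per post” first (gap 3.0), then “shares by peers per week” (gap 1.0). Sorting by raw gap is the simplest possible choice; since the metrics are in different units, a real version would need to normalize the gaps (for example, as a fraction of each target) before comparing them.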

Can tools help? (And how?)

Absolutely and without question, the answer is yes. But just as other businesses and industries have jumped too quickly, often placing ‘technology before process’, so has SM, in my view. Part of this is due to how the industry is “wired” and how it has evolved. Since it was born through technology, and is managed and staffed with a heavy technology bent, it’s not surprising that we’ve reached a point where data has become king while user interfaces leave a lot to be desired.

I’m not talking about the ease of navigation, the placement of charts, or the rendering of drill down information. I’m talking about how the user (the customer) thinks…starting with their goals, and accessing the relevant metrics to show progress and critical actions they need to take to improve. I suspect the developer who can “visualize” (to use an overused term in today’s SM environment) that kind of “line of sight” will ultimately win the hearts of its users.

The other role technology can play is enabling the algorithms and models required to deploy the kind of “mass customized”/goals-oriented solution I described above. Without these tools, normalizing, analyzing and modeling these relationships would be impossible. So in my view, the tools are critical, but the effort first needs to go into the process (getting the line of sight understood), and only then into working the raw data in a way that renders it in a context-specific visualization. That’s in a perfect world- but it’s still a good aspiration.

Like I said, these are just the things that are ‘top of mind’ for me at the moment, and only informed by the lens through which “Bob” is looking. I’m sure some of these issues are top of mind for you too, and you may actually be unveiling (right now) that new “holy grail” subscription site that has the answers. If so, great…I may be your perfect customer. But if the last two decades have taught me anything, it is that different perspectives and different lenses often pose new questions and spark new crystal balling that lifts the entire game.

Of course I welcome any comments and expansion on the above list. As I said earlier, this is just the beginning of my own thinking, inspired in part by some of yours. I look forward to more of yours!

PS- For anyone who is interested in Performance Management and Metrics topics outside of the world of SM, feel free to bookmark http://EPMEdge.com

Links to some of my more recent posts on these subjects are provided below

Incorporating the principle of “line of sight” into your performance measurement and management program

Managing through the “rear view mirror”- a dangerous path for any business

Data, information and metrics: Are we better off than we were 4 years ago?

-b

Author: Bob Champagne is Managing Partner of onVector Consulting Group, a privately held international management consulting organization specializing in the design and deployment of Performance Management tools, systems, and solutions. Bob has over 25 years of Performance Management experience and has consulted with hundreds of companies across numerous industries and geographies. Bob can be contacted at bob.champagne@onvectorconsulting.com

An end-to-end approach for managing customer experience strategy and delivering on its promises...

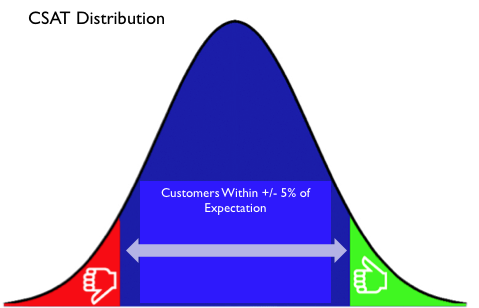

While they were few and far between, there were failures and successes on “the fringes” of the distribution. I’ve shown a simple example of what I mean, although I believe actual research on a broader set of experiences would probably show that the distribution is anything but “normal”/Gaussian (i.e., these days it is likely skewed to the left, assuming customers still have some semblance of expectation, or hope, of good service, which, of course, is debatable; I’ll leave that for another post).

Is what we’re measuring today meaningful?

Analysis– When many companies hear the word “analysis”, they go straight to thinking about how they can better “work the data” they have. They begin by taking their scorecard down a few layers, and “drill down” becomes synonymous with “analysis”. However, while both are critical activities, they play very separate roles in the process. The act of “drilling down” (slicing data between plants, operating regions, time periods, etc.) will give you a good indication of where problems exist. But it is not the “real analysis” that will get you very far down the path of defining root causes and, ultimately, better solutions. And that is often why we get stuck at this level. Continuously spinning the “cube” gets you no closer to the solution unless you get there by accident, and that is certainly the long way home. Good analysis starts with good questions. It leads you to generate a hypothesis, which you may test, change and retest several times. More often than not, it takes you into collecting data that may not (and perhaps should not) reside in your scorecard or dashboard. It requires sampling events and testing your hypotheses, and it often involves modeling causal factors and drivers. But it all starts with good questions. When we refer to “spending more time in the problem”, this is what we’re talking about: not merely spinning the scorecard around its multiple dimensions to see what solutions “emerge”.
